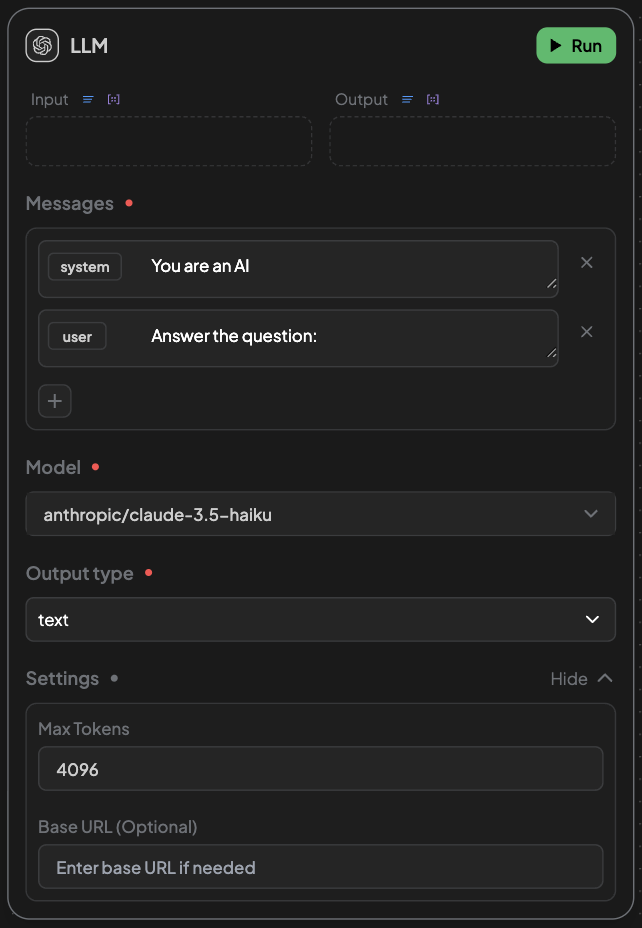

LLM Edge

Use this edge to interact with large language models (LLMs) via API or locally hosted instances.

Purpose:

- Generate text outputs from structured or unstructured input.

- Answer questions, summarize, or rewrite content.

Inputs:

- A text block or structured block with relevant information.

Outputs:

- A text block with the LLM's response.

Configuration:

- Messages: The prompt, made up of system and user messages. You can refer to input blocks using double curly braces (e.g. {{block_label}}). Click to copy the input block's label, then paste it into the prompt.

- Output type: Defaults to text. Enable structured output to have the LLM return its content as a JSON object.

- Model: Select the model to use.

- Settings: Token limit and Base URL.
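
The double-curly-brace references in Messages behave like template substitution: each {{block_label}} is replaced with the content of the input block that has that label. The Python sketch below illustrates the idea only. It is not PuppyAgent's implementation; the block labels (article, question), the `render` helper, and the choice to leave unknown labels untouched are all assumptions made for the example.

```python
import re

# Hypothetical input blocks, keyed by block label (illustrative names only).
blocks = {
    "article": "PuppyAgent connects blocks with edges.",
    "question": "What connects blocks?",
}

# A prompt with system and user roles that refers to blocks via {{label}}.
messages = [
    {"role": "system", "content": "Answer using only this context: {{article}}"},
    {"role": "user", "content": "{{question}}"},
]

# Matches {{label}}, allowing optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(content: str, blocks: dict) -> str:
    """Replace each {{label}} with the matching block's text.

    Labels with no matching block are left unchanged (an assumption
    of this sketch, not documented PuppyAgent behavior).
    """
    return PLACEHOLDER.sub(lambda m: blocks.get(m.group(1), m.group(0)), content)


rendered = [
    {"role": m["role"], "content": render(m["content"], blocks)}
    for m in messages
]

for m in rendered:
    print(f'{m["role"]}: {m["content"]}')
```

Leaving unmatched labels in place makes a mistyped label easy to spot in the rendered prompt, which is why the sketch does not silently replace them with an empty string.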

Available Models:

- OpenAI: o1-mini, o1, o1-pro, o3-mini, o3-mini-high, gpt-4-turbo, gpt-4.5-preview, gpt-4o-mini, gpt-4o

- Anthropic: claude-3.5-haiku, claude-3.5-sonnet, claude-3.7-sonnet

- DeepSeek: deepseek-v3, deepseek-r1

- Localhost: Any model that is deployed on your local server and connected to PuppyAgent.